Hi,

I followed the instructions here Model-References/PyTorch/computer_vision/segmentation/Unet at 1.8.0 · HabanaAI/Model-References · GitHub for Unet2D training with 8 Gaudis

Running this command

$PYTHON -u main.py --results /tmp/Unet/results/fold_0 --task 1 --logname res_log

–fold 0 --hpus 8 --gpus 0 --data /data/01_2d --seed 123 --num_workers 8

–affinity disabled --norm instance --dim 2 --optimizer fusedadamw --exec_mode train

–learning_rate 0.001 --hmp --hmp-bf16 ./config/ops_bf16_unet.txt

–hmp-fp32 ./config/ops_fp32_unet.txt --deep_supervision --batch_size 64

–val_batch_size 64 --min_epochs 30 --max_epochs 10000 --train_batches 0 --test_batches 0

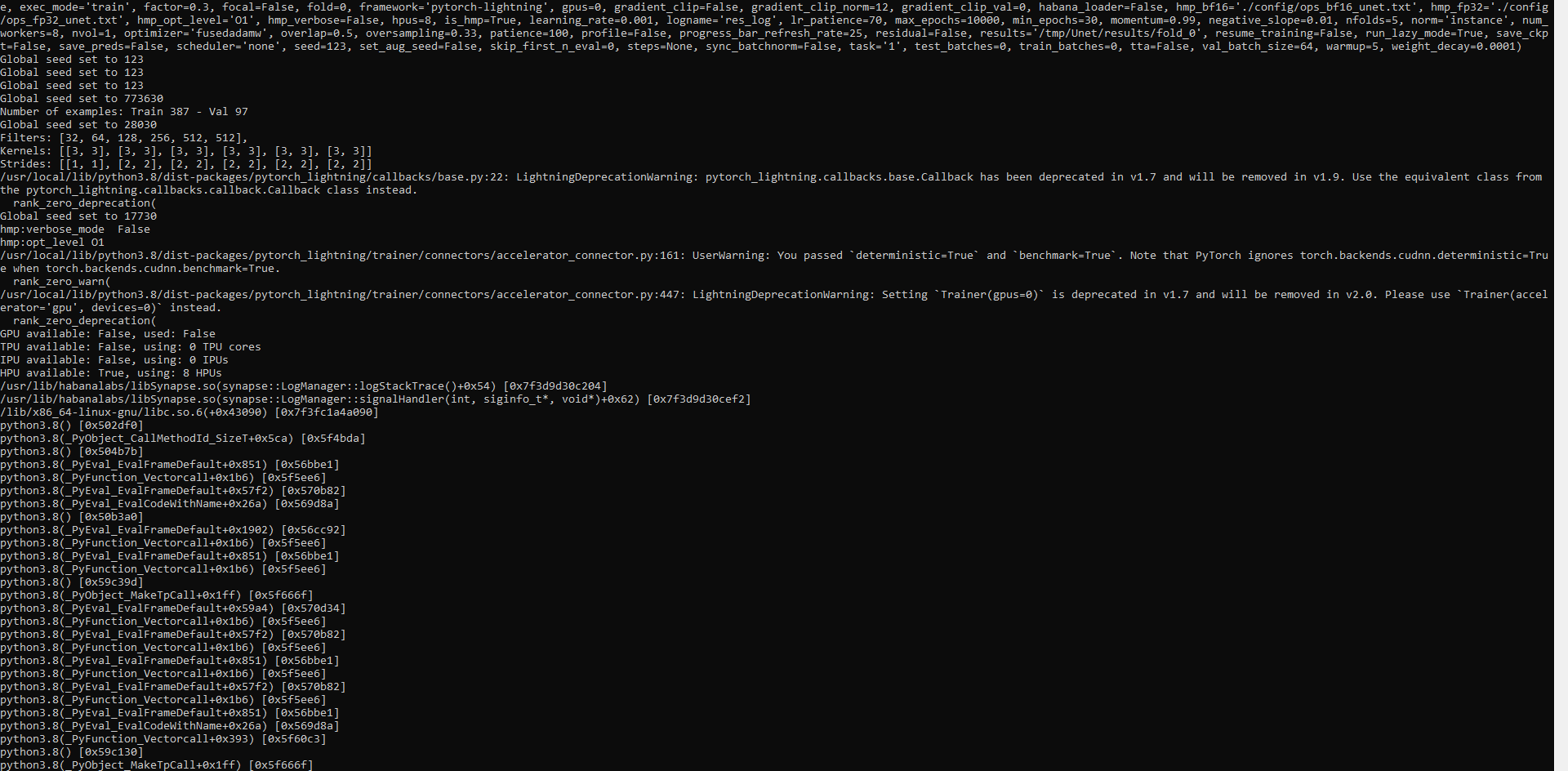

The training crashes. see screenshot