Issue:

We are running inference on a diffusion model. To produce an output with a diffusion model, we need to start from a normally distributed input (using torch.randn). On hpu the output is just noise (this does not happen on cpu or gpu). We also saw that if we compute the input initialization (randn) on cpu, then move it to hpu and continue the calculations on hpu, we get the expected results.

Our assumption is that randn on hpu might not produce a proper normal distribution.

Analysis:

import torch

x_hpu = torch.randn([2, 3, 256, 256], device=torch.device("hpu")).to("cpu")

x_cpu = torch.randn([2, 3, 256, 256], device=torch.device("cpu"))

To get the histogram (with x being either x_hpu or x_cpu):

import pandas as pd

data = pd.Series(x.flatten().tolist())

data.hist()

data.std()

To get the QQ plot:

import statsmodels.api as sm

import pylab

sm.qqplot(data, line='45')

pylab.show()

The calculations of the expectations below might also show that we don't get what we expect for a normal distribution (see here: integration - Expectation of a Standard Normal Random Variable - Mathematics Stack Exchange)
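One way to make the expectation check concrete is to compare the empirical raw moments of the samples with those of a standard normal, for which E[X] = 0, E[X^2] = 1, E[X^3] = 0 and E[X^4] = 3. The sketch below is only an illustration: the helper names `empirical_moments` and `looks_standard_normal` and the tolerance are assumptions, not part of the original analysis, and it uses only the standard library so it can run without an hpu.

```python
import random

# Theoretical raw moments E[X], E[X^2], E[X^3], E[X^4] of a standard normal N(0, 1).
STANDARD_NORMAL_MOMENTS = (0.0, 1.0, 0.0, 3.0)


def empirical_moments(samples):
    """Return sample estimates of E[X], E[X^2], E[X^3], E[X^4]."""
    n = len(samples)
    return tuple(sum(v ** k for v in samples) / n for k in (1, 2, 3, 4))


def looks_standard_normal(samples, tol=0.1):
    """Crude check: every raw moment lies within `tol` of the N(0, 1) value.

    `tol` is an arbitrary illustrative threshold, not a formal statistical test.
    """
    moments = empirical_moments(samples)
    return all(abs(m - t) <= tol for m, t in zip(moments, STANDARD_NORMAL_MOMENTS))


if __name__ == "__main__":
    rng = random.Random(0)
    reference = [rng.gauss(0.0, 1.0) for _ in range(200_000)]
    print(empirical_moments(reference))
    print(looks_standard_normal(reference))
```

To apply it to the tensors from this post, the flattened values can be passed in the same way as for the histogram, for example `looks_standard_normal(x_hpu.flatten().tolist())`.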

Hi @estellea, to be clear, you are running inference on Gaudi, correct? Can you share the model so that we can try to reproduce this?

We made those changes to cfg_sample to enable hpu support

As well as:

commenting out "convert_weights" in CLIP/clip/model.py:429 to go full fp32

Hi @Greg_S ,

Yes exactly: inference on Gaudi

The model can be found here : GitHub - crowsonkb/v-diffusion-pytorch: v objective diffusion inference code for PyTorch.

Using this command line:

./cfg_sample.py "red apple":5 -n 1 -bs 4 --seed 0 --hpu

You will see very different outputs if you run it on gpu/cpu versus hpu.

If you only change the function run_all in cfg_sample to use:

x_cpu = torch.randn([n, 3, side_y, side_x], device=torch.device("cpu"))

x = x_cpu.to("hpu")

you will see results comparable to those on gpu/cpu
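The workaround above could be wrapped in a small helper so the noise is always drawn on the cpu and only then moved to the target device. This is a minimal sketch: the function name `initial_noise` and the use of a dedicated `torch.Generator` for seeding are assumptions for illustration, and may differ from how cfg_sample handles `--seed`.

```python
import torch


def initial_noise(shape, device, seed=0):
    """Sample N(0, 1) noise on the cpu, then move it to `device`.

    Hypothetical helper: it sidesteps device-side randn by always
    sampling on the cpu with its own seeded generator.
    """
    generator = torch.Generator(device="cpu").manual_seed(seed)
    noise = torch.randn(shape, generator=generator, device="cpu")
    return noise.to(device)
```

In run_all this would be called as `x = initial_noise([n, 3, side_y, side_x], "hpu")`, which requires the Habana PyTorch bridge to be loaded. With `device="cpu"` it runs anywhere and returns the same tensor for the same seed.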

thank you @estellea, we’re reviewing this now

Hi @estellea

Thanks for pointing out the issue. We will update here once it's fixed.

In the meantime, maybe you can use the Box-Muller transform to generate/simulate a Gaussian distribution from a uniform distribution.

Sample code:

import torch

import matplotlib.pyplot as plt

import math

device = 'hpu'

if device == 'hpu':

    from habana_frameworks.torch.utils.library_loader import load_habana_module

    load_habana_module()

# Set this to True to sample with torch.randn on cpu instead of the workaround

if False:

    x = torch.randn([2, 3, 256, 256], device=torch.device("cpu"))

else:

    # Box-Muller: two uniform samples u1, u2 give one standard normal sample z1

    u1 = torch.rand([2, 3, 256, 256]).to(device)

    u2 = torch.rand([2, 3, 256, 256]).to(device)

    z1 = torch.sqrt(-2 * torch.log(u1)) * torch.cos(2 * math.pi * u2)

    x = z1

import pdb; pdb.set_trace()

x = x.to('cpu')

import pandas as pd

data = pd.Series(x.flatten().tolist())

ax = data.hist(bins=100)

data.std()

fig = ax.get_figure()

fig.savefig('test_hist.pdf')

import statsmodels.api as sm

import pylab

sm.qqplot(data, line='45')

plt.savefig('testplot.png')
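For reference, the Box-Muller transform used in the sample code can also be sketched with the standard library alone. The version below is an illustration, not the code from this post: the names `box_muller_pair` and `box_muller_samples` are hypothetical. It adds two details the sample code does not have: it keeps `u1` strictly above zero so that `log(u1)` stays finite, and it returns both normal samples that each pair of uniforms yields.

```python
import math
import random


def box_muller_pair(rng):
    """Turn two uniform samples into two independent N(0, 1) samples."""
    # rng.random() is in [0, 1), so 1 - rng.random() is in (0, 1] and log(u1) is finite.
    u1 = 1.0 - rng.random()
    u2 = rng.random()
    radius = math.sqrt(-2.0 * math.log(u1))
    angle = 2.0 * math.pi * u2
    return radius * math.cos(angle), radius * math.sin(angle)


def box_muller_samples(count, seed=0):
    """Return `count` approximately N(0, 1) samples from a seeded generator."""
    rng = random.Random(seed)
    samples = []
    while len(samples) < count:
        samples.extend(box_muller_pair(rng))
    return samples[:count]
```

In the tensor version, a similar guard could presumably be applied by keeping `u1` away from zero before taking the logarithm, since `torch.rand` can also return exactly 0.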

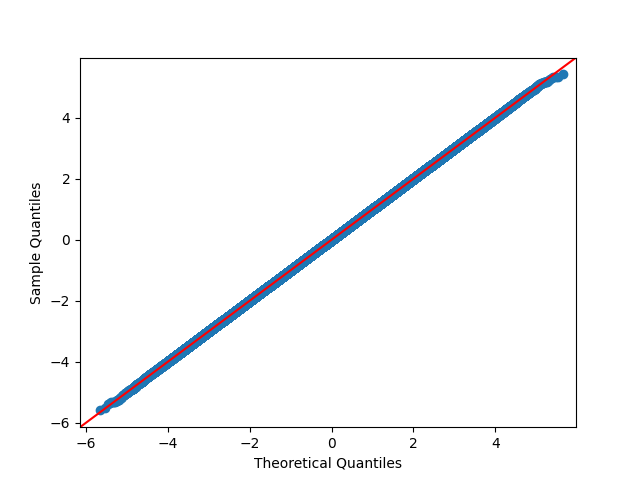

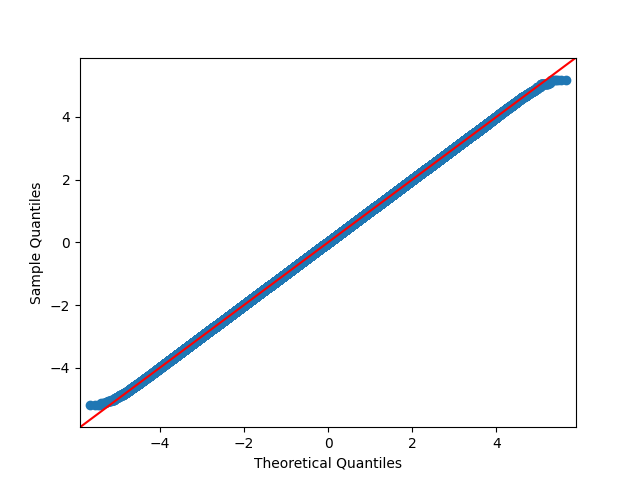

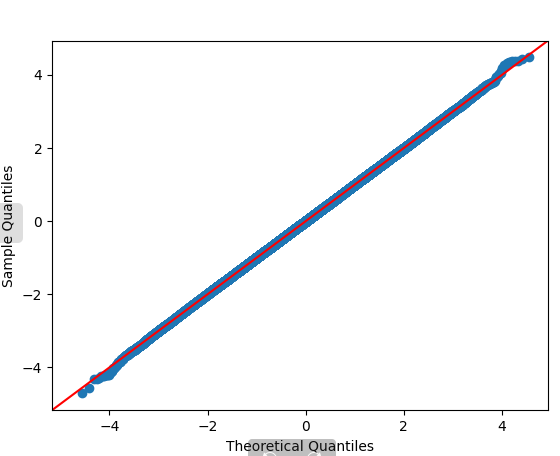

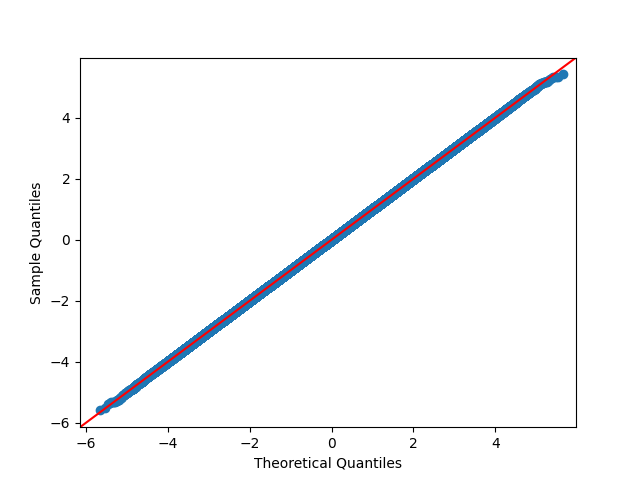

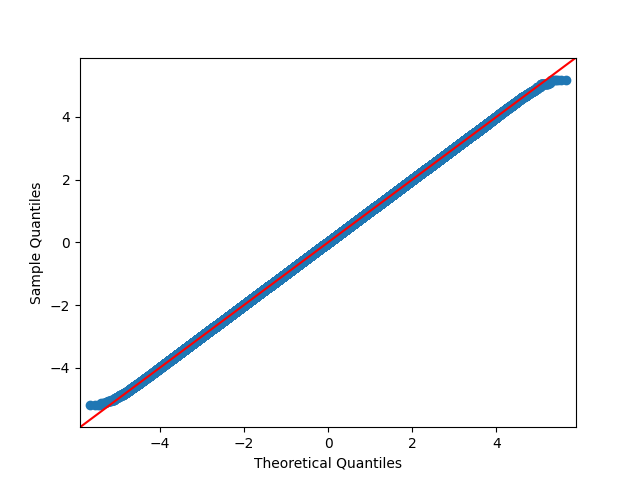

I see this qq plot and histogram:

Let us know if this unblocks you.

Thanks

Thank you @Sayantan_S this is what we did

Hi!

Any update on this thread?

Thank you!

Estelle

@estellea

Could you please try the newly released 1.7 and see if it works for you?

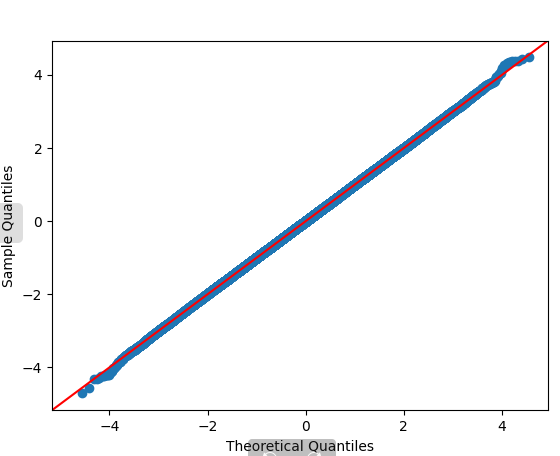

Attached here are the cpu vs hpu QQ plots for the 1.7 release.